computational linguistics (CL)

What is computational linguistics (CL)?

Computational linguistics (CL) is the application of computer science to the analysis and comprehension of written and spoken language. As an interdisciplinary field, CL combines linguistics with computer science and artificial intelligence (AI) and is concerned with understanding language from a computational perspective. Computers that are linguistically competent help facilitate human interaction with machines and software.

Computational linguistics is used in tools like instant machine translation, speech recognition systems, text-to-speech synthesizers, interactive voice response systems, search engines, text editors and language instruction materials.

Computational linguists typically work in universities, governmental research labs or large enterprises. In the private sector, vertical companies typically employ computational linguists to verify that technical manuals are translated accurately. Software companies, such as Microsoft, hire computational linguists to work on natural language processing (NLP), helping programmers create voice user interfaces that let people communicate with computing devices as if they were talking to another person.

A computational linguist is generally expected to have expertise in machine learning (ML), deep learning, AI, cognitive computing and neuroscience. Individuals pursuing a job as a computational linguist generally need a master's or doctoral degree in a computer science-related field or a bachelor's degree with work experience developing natural language software.

The term computational linguistics is also very closely linked to NLP, and these two terms are often used interchangeably.

Goals of computational linguistics

Business goals of computational linguistics include the following:

- Create grammatical and semantic frameworks for characterizing languages.

- Translate text from one language to another.

- Retrieve text that relates to a specific topic.

- Analyze text or spoken language for context, sentiment or other affective qualities.

- Answer questions, including those that require inference and descriptive or discursive answers.

- Summarize text.

- Build dialogue agents capable of completing complex tasks such as making a purchase, planning a trip or scheduling maintenance.

- Create chatbots capable of passing the Turing Test.
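
As a rough illustration of the retrieval goal above, the following minimal sketch ranks documents by how often they contain the query's terms. The `tokenize` and `rank` functions and the sample documents are hypothetical names and data chosen for this example; production retrieval systems typically use weighting schemes and indexes that are not shown here.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rank(query, documents):
    """Return indexes of documents that share terms with the query.

    Documents are ordered by the total count of query terms they contain,
    with ties broken by original position. Documents with no overlap are
    left out.
    """
    query_terms = set(tokenize(query))
    scored = []
    for index, doc in enumerate(documents):
        counts = Counter(tokenize(doc))
        score = sum(counts[term] for term in query_terms)
        if score > 0:
            scored.append((score, index))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [index for _, index in scored]


# Hypothetical sample documents for illustration only.
docs = [
    "Machine translation converts text between languages.",
    "Speech recognition turns audio into text.",
    "The cafeteria opens at noon.",
]
print(rank("text translation", docs))
```

Counting raw term overlap is the simplest possible scoring choice; it treats every word as equally informative, which is one reason real systems tend to weight rare terms more heavily.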

CL vs. NLP

Computational linguistics and natural language processing are similar concepts, as both fields require formal training in computer science, linguistics and machine learning. Both use the same tools, such as machine learning and AI, to accomplish their goals, and many NLP tasks need an understanding or interpretation of language.

Where NLP deals with the ability of a computer program to understand human language as it is spoken and written, CL focuses on the computational description of languages as a system. Computational linguistics also leans more toward linguistics and answering linguistic questions with computational tools; NLP, on the other hand, is concerned with applying language processing to practical tasks.

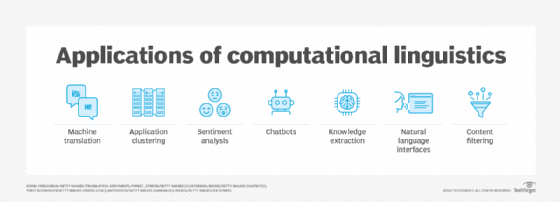

Applications of computational linguistics

Most work in computational linguistics, which has both theoretical and applied elements, is aimed at improving how computers handle human language. It involves building artifacts that can be used to process and produce language. Building such artifacts requires data scientists to analyze massive amounts of written and spoken language in both structured and unstructured formats.

Applications of CL typically include the following:

- Machine translation. This is the process of using AI to translate one human language to another.

- Clustering. This is the process of grouping similar texts, such as documents or sentences, so that related content can be analyzed together.

- Sentiment analysis. This approach to NLP identifies the emotional tone behind a body of text.

- Chatbots. These software or computer programs simulate human conversation or chatter through text or voice interactions.

- Knowledge extraction. This is the creation of knowledge from structured and unstructured text.

- Natural language interfaces. These are computer-human interfaces where words, phrases or clauses act as user interface controls.

- Content filtering. This process blocks various language-based web content from reaching end users.
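
To make one of the applications above more concrete, here is a minimal sketch of lexicon-based sentiment analysis, which is only one of several ways to approach the task. The word lists and the `sentiment_score` and `sentiment_label` functions are hypothetical and deliberately tiny; real systems usually rely on much larger lexicons or on trained models.

```python
import re

# Hypothetical toy lexicons, assumed for this example only.
POSITIVE = {"good", "great", "excellent", "love", "helpful"}
NEGATIVE = {"bad", "poor", "terrible", "hate", "broken"}


def sentiment_score(text):
    """Return (number of positive words) minus (number of negative words)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum((t in POSITIVE) - (t in NEGATIVE) for t in tokens)


def sentiment_label(text):
    """Map the numeric score to a coarse label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment_label("The manual is great and the support was helpful"))
print(sentiment_label("The update is terrible and the app is broken"))
```

A word-counting approach like this ignores negation and context ("not good" would count as positive), which illustrates why identifying tone is harder than matching keywords.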

Approaches and methods of computational linguistics

There have been many different approaches and methods of computational linguistics since its beginning in the 1950s. Examples of some CL approaches include the following:

- The corpus-based approach, which is based on the language as it is practically used.

- The comprehension approach, which enables the NLP engine to interpret naturally written commands in a simple rule-governed environment.

- The developmental approach, which adopts the language acquisition strategy of a child, acquiring language over time. This approach studies language statistically and does not take grammatical structure into account.

- The structural approach, which takes a theoretical approach to the structure of a language. This approach uses large samples of a language run through CL models so it can gain a better understanding of the underlying language structures.

- The production approach, which focuses on a CL model to produce text. This has been done in a number of ways, including the construction of algorithms that produce text based on example texts from humans.

- The text-based interactive approach, in which text from a human is used to generate a response by an algorithm. A computer is able to recognize different patterns and reply based on user input and specified keywords.

- The speech-based interactive approach, which works similarly to the text-based approach, but the user input is made through speech recognition. The user's speech input is recognized as sound waves and is interpreted as patterns by the CL system.
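
The text-based interactive approach described above can be sketched as a small set of keyword rules. This is a minimal illustration under stated assumptions, not a description of any particular system: the `RULES` table, the `FALLBACK` reply and the `respond` function are all hypothetical.

```python
import re

# Hypothetical keyword rules: each pattern is paired with a canned reply.
RULES = [
    (re.compile(r"\b(hello|hi|hey)\b", re.IGNORECASE), "Hello! How can I help you?"),
    (re.compile(r"\b(price|cost)\b", re.IGNORECASE), "Pricing details are on the plans page."),
    (re.compile(r"\b(bye|goodbye)\b", re.IGNORECASE), "Goodbye!"),
]
FALLBACK = "Sorry, I did not understand that."


def respond(user_input):
    """Return the reply of the first rule whose keyword appears in the input."""
    for pattern, reply in RULES:
        if pattern.search(user_input):
            return reply
    return FALLBACK


print(respond("Hi there"))
print(respond("What does it cost?"))
```

The word-boundary markers (`\b`) keep a keyword such as "hi" from matching inside an unrelated word such as "this". A speech-based variant would place a speech recognition step in front of `respond` and pass it the recognized text.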

History of computational linguistics

Although the concept of computational linguistics is often associated with AI, CL predates AI's development, according to the Association for Computational Linguistics. One of the first instances of CL came from an attempt to translate text from Russian to English. The thought was that computers could make systematic calculations faster and more accurately than a person, so it would not take long to process a language. However, the complexities of language were underestimated, and developing a working program took much more time and effort than expected.

Two programs with more complicated syntactic and semantic mapping rules were developed in the early 1970s. SHRDLU was a natural language parser developed in 1971 by computer scientist Terry Winograd at MIT. SHRDLU combined human linguistic models with reasoning methods. This was a major accomplishment for natural language processing research.

Also in 1971, Lunar, a system developed for NASA, was demonstrated at a lunar science conference. The Lunar system answered attendees' questions about the composition of the rocks returned from the Apollo moon missions.

Before this, translating languages was difficult because a system had to understand grammar and the syntax in which words were used. Since then, strategies for implementing CL have moved away from procedural approaches toward ones that are more linguistic, understandable and modular. In the late 1980s, increased computing power led to a shift toward statistical methods in CL. Corpus-based statistical approaches were also developed around this time.

Modern CL relies on many of the same tools and processes as NLP. These systems may use a variety of tools, including AI, ML, deep learning and cognitive computing. As an example, GPT-3, or the third-generation Generative Pre-trained Transformer, is a neural network machine learning model that produces text based on user input. It was released by OpenAI in 2020 and was trained using internet data to generate any type of text. It needs only a small amount of input text to generate large volumes of relevant text. GPT-3 has 175 billion machine learning parameters. By comparison, the largest trained language model before it, Microsoft's Turing-NLG, had 17 billion parameters.
